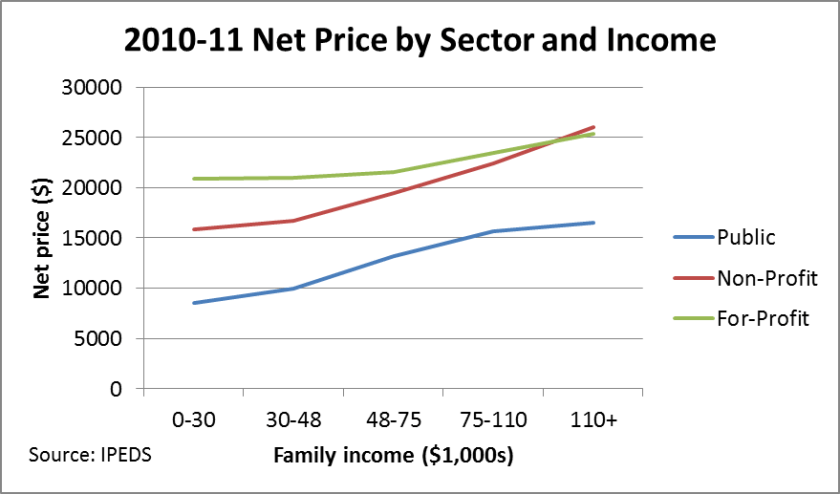

I am thrilled to see more researchers and policymakers taking advantage of the net price data (the cost of attendance less all grant aid) available through the federal IPEDS dataset. These data can show which colleges do a good job of keeping out-of-pocket costs low, either for all students who receive federal financial aid or only for students from the lowest-income families.
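To make the definition concrete, here is a minimal sketch of how an average net price could be computed for all aided students versus only the lowest-income group. The student records, field names, and dollar figures are made up for illustration and are not IPEDS data; the $30,000 cutoff follows the income bracket discussed in this post.

```python
from statistics import mean

def net_price(cost_of_attendance, total_grant_aid):
    """Net price = cost of attendance minus all grant aid."""
    return cost_of_attendance - total_grant_aid

def average_net_price(students, max_income=None):
    """Average net price, optionally limited to incomes below max_income."""
    selected = [
        s for s in students
        if max_income is None or s["income"] < max_income
    ]
    return mean(net_price(s["coa"], s["grants"]) for s in selected)

# Hypothetical aided students at one college (illustrative numbers only).
students = [
    {"income": 22_000, "coa": 24_000, "grants": 15_000},
    {"income": 28_000, "coa": 24_000, "grants": 13_000},
    {"income": 45_000, "coa": 24_000, "grants": 8_000},
]

all_aided = average_net_price(students)
lowest_income = average_net_price(students, max_income=30_000)
```

With these invented numbers, the average for the lowest-income group is based on only two of the three students, which is the kind of small-sample issue that can arise when a single income bracket is isolated.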

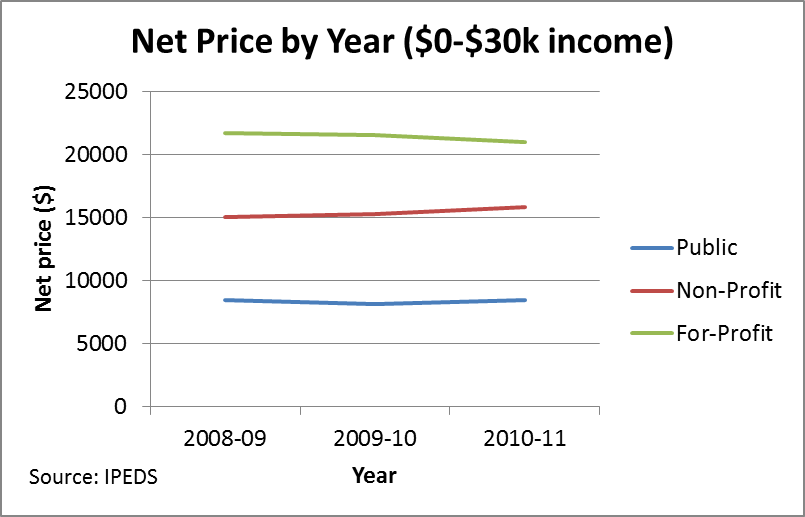

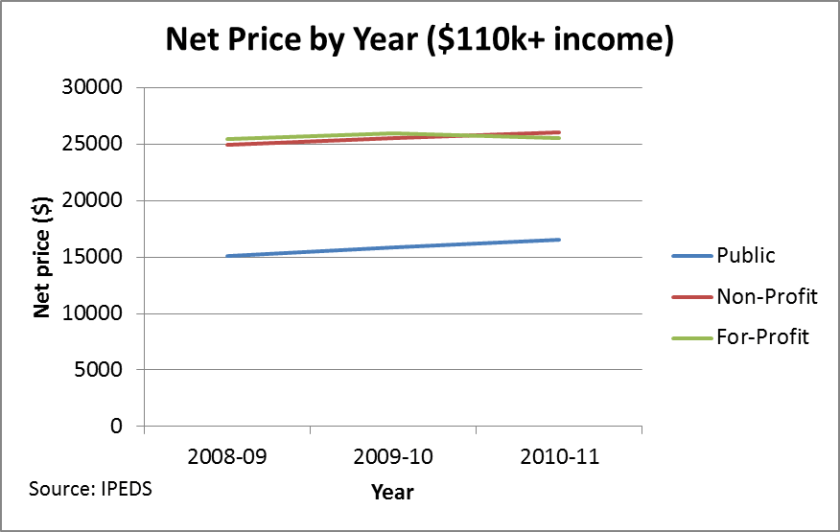

Stephen Burd of the New America Foundation released a fascinating report today showing the net prices for the lowest-income students (those with household incomes below $30,000 per year) in conjunction with the percentage of students receiving Pell Grants. The report lists colleges that succeed in keeping the net price low for the neediest students while enrolling a substantial proportion of Pell recipients, as well as colleges that charge relatively high net prices to a small number of low-income students.

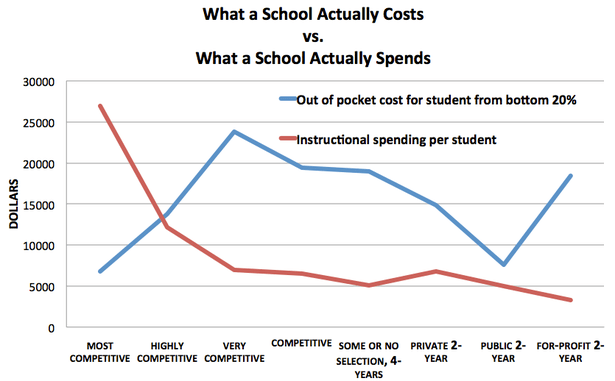

The report advocates for a greater focus on financially needy students and a shift toward aid based on financial need instead of academic qualifications. Indeed, the phrase “merit aid” has fallen out of favor in a good portion of the higher education community. An example of this came at last week’s Education Writers Association conference, where many journalists stressed the importance of using the phrase “non-need based aid” instead of “merit aid” to change the public’s perspective on the term. But regardless of the preferred name, aid based on academic characteristics is used to attract students with more financial resources and to help colleges stay near the top of prestige-based rankings such as U.S. News & World Report.

While the report is a great addition to the policy debate, it deserves a substantial caveat. The measure of net price for low-income students includes only students with household incomes below $30,000. This does not line up perfectly with Pell recipients, who often have household incomes around $40,000 per year. Additionally, focusing on just the lowest income bracket can leave only a small number of students in the analysis. At small liberal arts colleges, the net price may be based on fewer than 100 students. It also creates an opportunity to game the system by charging much higher prices to families making just over $30,000 per year, a potentially undesirable outcome.

As an aside, I’m defending my dissertation tomorrow, so wish me luck! I hope to get back to blogging somewhat more frequently in the next few weeks.